As our association's network grew, we needed a central place to record DNS entries and a way to make them available across the entire network. The solution had to be easy to set up and update and, above all, had to pick up changes to IP addresses quickly.

Source

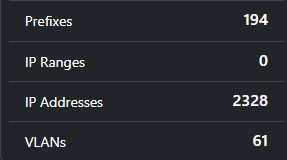

We operate Netbox as an IPAM (IP Address Management) system. All our networks and IP addresses are recorded in it:

Since the recorded IP addresses map directly to our DNS records, we decided to use Netbox as the source system for our DNS information as well.

This is how the tool nx (Netbox Exporter) came about. In essence, it generates static files from Netbox metadata (tags). In practice, we mainly use nx to generate BIND zone files.

Metadata

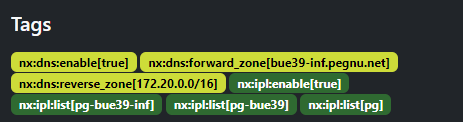

The most important metadata comes from the tags of the network prefixes:

The image shows the prefix 172.20.0.0/24 with several `nx:<...>` tags attached; these tags control what nx generates.

More precisely, the tags ensure:

- that zone generation in nx is enabled (`nx:dns:enable[true]`),
- that the reverse DNS entries are generated in the `20.172.in-addr.arpa` zone (`nx:dns:reverse_zone[172.20.0.0/16]`), and
- that the forward DNS entries are generated in the `pegnu.net` zone, with `bue39-inf` appended to the name from Netbox (`nx:dns:forward_zone[bue39-inf.pegnu.net]`).
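To make the tag format concrete, here is a minimal Go sketch (not nx's actual code) that splits a tag of the form `nx:<namespace>:<key>[<value>]` and derives a reverse zone name such as `20.172.in-addr.arpa` from the prefix `172.20.0.0/16`. The helpers `parseTag` and `reverseZoneName` are hypothetical, and the sketch only handles IPv4 prefixes whose length is a multiple of 8.

```go
package main

import (
	"fmt"
	"net/netip"
	"regexp"
	"strings"
)

// tagPattern matches tags of the (assumed) form nx:<namespace>:<key>[<value>].
var tagPattern = regexp.MustCompile(`^nx:([^:\[\]]+):([^:\[\]]+)\[(.*)\]$`)

// parseTag splits an nx tag into namespace, key and value.
// ok is false if the tag does not follow the pattern.
func parseTag(tag string) (ns, key, value string, ok bool) {
	m := tagPattern.FindStringSubmatch(tag)
	if m == nil {
		return "", "", "", false
	}
	return m[1], m[2], m[3], true
}

// reverseZoneName derives the in-addr.arpa zone for an IPv4 prefix whose
// length is a multiple of 8, e.g. 172.20.0.0/16 -> 20.172.in-addr.arpa.
func reverseZoneName(cidr string) (string, error) {
	p, err := netip.ParsePrefix(cidr)
	if err != nil {
		return "", err
	}
	if !p.Addr().Is4() || p.Bits()%8 != 0 {
		return "", fmt.Errorf("unsupported prefix %s", cidr)
	}
	octets := p.Masked().Addr().As4()
	n := p.Bits() / 8
	labels := make([]string, 0, n+2)
	// Reverse zones list the network octets in reverse order.
	for i := n - 1; i >= 0; i-- {
		labels = append(labels, fmt.Sprint(octets[i]))
	}
	labels = append(labels, "in-addr", "arpa")
	return strings.Join(labels, "."), nil
}

func main() {
	ns, key, value, _ := parseTag("nx:dns:reverse_zone[172.20.0.0/16]")
	zone, _ := reverseZoneName(value)
	fmt.Println(ns, key, value, zone)
	// prints: dns reverse_zone 172.20.0.0/16 20.172.in-addr.arpa
}
```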

Some metadata, however, applies to several prefixes at once, for example DNS server-specific settings and the assignment of zones to DNS servers. For this purpose, we created a small additional configuration file:

```json
{
  "netbox": {
    "url": "https://netbox.rack.farm/api",
    "api_key": "..."
  },
  "namespaces": {
    "dns": {
      "masters": [
        {
          "name": "vm-ns.bue39-dmz.pegnu.net",
          "ip": "172.20.4.6",
          "dotted_mail": "admin.peg.nu",
          "zones": [
            "pegnu.net",
            "16.16.172.in-addr.arpa",
            "20.172.in-addr.arpa",
            "7.0.8.a.8.6.1.0.2.0.a.2.ip6.arpa"
          ],
          "includes": [
            {
              "zone": "pegnu.net",
              "include_files": ["/etc/bind/zones/include.pegnu.net.db"]
            }
          ]
        }
      ]
    }
  }
}
```

Generation

Since nx is written in Go, using Go templates for the generation was the natural choice. The templates are also available on GitHub.
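The general idea can be shown with Go's standard `text/template` package: a list of records is rendered into zone-file lines. The template and the `Record` type below are heavily simplified, hypothetical stand-ins; nx's real templates are the ones on GitHub.

```go
package main

import (
	"fmt"
	"strings"
	"text/template"
)

// Record is one resource record line in a zone file (hypothetical type).
type Record struct {
	Name, Type, RData string
}

// zoneTemplate is a much simplified zone template.
const zoneTemplate = `$TTL {{.TTL}}
; Name Type RData
{{range .Records}}{{.Name}} {{.Type}} {{.RData}}
{{end}}`

var zoneTmpl = template.Must(template.New("zone").Parse(zoneTemplate))

// renderZone executes the template and returns the zone text.
func renderZone(ttl int, records []Record) (string, error) {
	var sb strings.Builder
	data := struct {
		TTL     int
		Records []Record
	}{ttl, records}
	if err := zoneTmpl.Execute(&sb, data); err != nil {
		return "", err
	}
	return sb.String(), nil
}

func main() {
	out, err := renderZone(120, []Record{
		{"fw-ng.bue39-inf", "A", "172.20.0.1"},
		{"fw-ng.bue39", "CNAME", "fw-ng.bue39-inf"},
	})
	if err != nil {
		panic(err)
	}
	fmt.Print(out)
}
```

`text/template` (rather than `html/template`) is the right package here, because zone files must not be HTML-escaped.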

Implementation details

First, the list of all network prefixes is loaded from Netbox. For every prefix tagged with `nx:<...>:enable[true]`, the IP addresses are loaded as well.
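A minimal sketch of this filtering step. It assumes a stripped-down, hypothetical `Prefix` type with tags as plain strings (the Netbox API returns richer tag objects) and a hypothetical helper `enabledPrefixes`.

```go
package main

import "fmt"

// Prefix is a stand-in for a Netbox prefix; the real API object has
// many more fields and structured tags.
type Prefix struct {
	Prefix string
	Tags   []string
}

// enabledPrefixes keeps the prefixes tagged nx:<ns>:enable[true] for at
// least one of the given namespaces.
func enabledPrefixes(prefixes []Prefix, namespaces ...string) []Prefix {
	var out []Prefix
	for _, p := range prefixes {
		if hasEnableTag(p.Tags, namespaces) {
			out = append(out, p)
		}
	}
	return out
}

func hasEnableTag(tags, namespaces []string) bool {
	for _, t := range tags {
		for _, ns := range namespaces {
			if t == "nx:"+ns+":enable[true]" {
				return true
			}
		}
	}
	return false
}

func main() {
	prefixes := []Prefix{
		{"172.20.0.0/24", []string{"nx:dns:enable[true]", "nx:dns:reverse_zone[172.20.0.0/16]"}},
		{"10.0.0.0/24", nil},
	}
	for _, p := range enabledPrefixes(prefixes, "dns") {
		// At this point, the IP addresses of p would be loaded from Netbox.
		fmt.Println(p.Prefix)
	}
}
```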

The enabled generators are then called one after another, each producing its own files. There are currently four generators: DNS zones, DNS configuration, IP list, and a (so far not particularly useful) WireGuard configuration generator. Each generator is responsible for reading the information it needs from the Netbox tags.
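The sequential generator loop could look roughly like this. The `Generator` interface, the toy `ipListGenerator` and `runGenerators` are hypothetical; the sketch only illustrates calling the enabled generators one after another and collecting their output files.

```go
package main

import (
	"fmt"
	"strings"
)

// Generator is a hypothetical interface for one kind of nx output.
type Generator interface {
	Name() string
	// Generate returns a map of file name -> file content.
	Generate(prefixes []string) (map[string]string, error)
}

// ipListGenerator is a toy generator that writes one prefix per line.
type ipListGenerator struct{}

func (ipListGenerator) Name() string { return "iplist" }

func (ipListGenerator) Generate(prefixes []string) (map[string]string, error) {
	return map[string]string{"iplist.txt": strings.Join(prefixes, "\n") + "\n"}, nil
}

// runGenerators calls the generators sequentially and merges their files;
// the first error aborts the run.
func runGenerators(gens []Generator, prefixes []string) (map[string]string, error) {
	files := map[string]string{}
	for _, g := range gens {
		out, err := g.Generate(prefixes)
		if err != nil {
			return nil, fmt.Errorf("%s: %w", g.Name(), err)
		}
		for name, content := range out {
			files[name] = content
		}
	}
	return files, nil
}

func main() {
	files, err := runGenerators([]Generator{ipListGenerator{}}, []string{"172.20.0.0/24"})
	if err != nil {
		panic(err)
	}
	fmt.Print(files["iplist.txt"])
}
```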

An interesting implementation detail is how Netbox tags are mapped to Go structs. A “tag parser” handles this: it uses Go struct tags (annotations on the struct fields) to decide which Netbox tag belongs to which field. This makes mapping the tags to a Go struct very simple:

```go
type DNSIP struct {
	IP              *netbox.IPAddress
	Enabled         bool     `nx:"enable,ns:dns"`
	ReverseZoneName string   `nx:"reverse_zone,ns:dns"`
	ForwardZoneName string   `nx:"forward_zone,ns:dns"`
	CNames          []string `nx:"cname,ns:dns"`
}

// ...

tagparser.ParseTags(&dnsIP, address.Tags, address.Prefix.Tags)
```

The code snippet above defines a struct that maps, for example, the field `Enabled` to the Netbox tag `nx:dns:enable`. In the last line, the tag values of the address and of its prefix are then mapped automatically onto an instance of the struct using these annotations.

Generated files

The files that matter for the DNS infrastructure are the BIND configuration and the actual BIND zones containing the DNS entries. An excerpt of a generated BIND configuration looks like this:

```
/* ACL allows remote slaves to pull the zones */
acl "nbbx-slaves-transfer" {
	10.66.66.2;
	192.168.5.40;
	/* <...> */
};

/* masters enables the notification of remote masters */
masters "nbbx-slaves-notify" {
	/* the same again... */
};

zone "66.66.10.in-addr.arpa" {
	type slave;
	file "/var/cache/bind/66.66.10.in-addr.arpa.db";
	masters { 10.66.66.2; };
	transfer-source 172.20.4.6;
};

zone "pegnu.net" {
	type master;
	file "/etc/bind/zones/pegnu.net.db";
	allow-transfer { "nbbx-slaves-transfer"; };
	also-notify { "nbbx-slaves-notify"; };
	notify yes;
};

/* <...> */
```

At the top, the ACL and the masters list are defined; both are generated from the configuration file. They are followed by the actual zone definitions, which differ depending on whether the current server is the master or a slave for the respective zone.

A generated DNS zone looks like this, for example:

```
$TTL 120
@ IN SOA vm-ns.bue39-dmz.pegnu.net. admin.peg.nu (
	201102641 ; serial
	900       ; slave refresh interval
	900       ; slave retry interval
	172800    ; slave copy expire interval
	600       ; NXDOMAIN cache time
)

; Nameserver
@ NS vm-ns.bue39-dmz.pegnu.net.

; Includes
$INCLUDE /etc/bind/zones/include.pegnu.net.db

; Name                Type   RData
gw-bue39.wg8-wal27    A      172.16.16.157
gw-wal27.wg8-wal27    A      172.16.16.158
fw-ng.bue39-inf       A      172.20.0.1
fw-ng.bue39           CNAME  fw-ng.bue39-inf

/* <...> */
```

The SOA values at the top are currently hard-coded in the program, although they could also be made configurable via the configuration file.
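Making these values configurable could be as simple as starting from the current hard-coded values and letting `encoding/json` overwrite only the fields present in the configuration. The `SOA` type and its JSON keys are hypothetical; the defaults are the values from the zone above.

```go
package main

import (
	"encoding/json"
	"fmt"
)

// SOA holds the zone timing values. TTL is the $TTL directive; the other
// fields are the SOA refresh, retry, expire and negative-caching values.
type SOA struct {
	TTL         int `json:"ttl"`
	Refresh     int `json:"refresh"`
	Retry       int `json:"retry"`
	Expire      int `json:"expire"`
	NegativeTTL int `json:"negative_ttl"`
}

// defaultSOA matches the currently hard-coded values.
var defaultSOA = SOA{TTL: 120, Refresh: 900, Retry: 900, Expire: 172800, NegativeTTL: 600}

// loadSOA applies overrides from raw JSON on top of the defaults.
// json.Unmarshal only sets fields present in the input, so missing
// keys keep their default values.
func loadSOA(raw []byte) (SOA, error) {
	soa := defaultSOA // copy; the defaults stay untouched
	if len(raw) == 0 {
		return soa, nil
	}
	if err := json.Unmarshal(raw, &soa); err != nil {
		return SOA{}, err
	}
	return soa, nil
}

func main() {
	soa, err := loadSOA([]byte(`{"ttl": 300}`))
	if err != nil {
		panic(err)
	}
	fmt.Printf("%+v\n", soa)
}
```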

Another special feature is the $INCLUDE directive before the DNS entries, which pulls in an external zone file. The reason is that we do not intend to generate everything with nx, only the most common cases. For records such as SRV or TXT, you currently have to fall back on the include file.

Further down, you can also see a CNAME entry, which was likewise defined via a Netbox tag. Apart from A, AAAA and PTR records, CNAMEs are currently as far as nx goes.
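A sketch of how the forward records for one address, including a CNAME from its tag, could be assembled. `forwardRecords` is hypothetical, and it assumes the CNAME tag value holds the alias relative to the zone (such as `fw-ng.bue39`).

```go
package main

import (
	"fmt"
	"net/netip"
	"strings"
)

// forwardRecords builds zone-relative records for one address.
// host is the Netbox name (e.g. "fw-ng"), forwardZone the value of
// nx:dns:forward_zone (e.g. "bue39-inf.pegnu.net") and zone the zone
// file the records go into (e.g. "pegnu.net").
func forwardRecords(host, forwardZone, zone, ip string, cnames []string) ([]string, error) {
	addr, err := netip.ParseAddr(ip)
	if err != nil {
		return nil, err
	}
	if forwardZone != zone && !strings.HasSuffix(forwardZone, "."+zone) {
		return nil, fmt.Errorf("%s is not inside zone %s", forwardZone, zone)
	}
	// "bue39-inf.pegnu.net" in zone "pegnu.net" -> suffix "bue39-inf"
	name := host
	if sub := strings.TrimSuffix(strings.TrimSuffix(forwardZone, zone), "."); sub != "" {
		name += "." + sub
	}
	rtype := "A"
	if addr.Is6() {
		rtype = "AAAA"
	}
	records := []string{fmt.Sprintf("%s %s %s", name, rtype, addr)}
	for _, alias := range cnames {
		records = append(records, fmt.Sprintf("%s CNAME %s", alias, name))
	}
	return records, nil
}

func main() {
	recs, err := forwardRecords("fw-ng", "bue39-inf.pegnu.net", "pegnu.net", "172.20.0.1", []string{"fw-ng.bue39"})
	if err != nil {
		panic(err)
	}
	for _, r := range recs {
		fmt.Println(r)
	}
}
```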

Distribution

We wanted the zone files to remain available even if a VPN connection goes down or the site where they are generated is offline. That is why we store them in an Azure Blob Storage account. They are not publicly accessible, though; that was never the intention.

Access is controlled with Shared Access Signatures (SAS), which let us create temporary access links for retrieving the zone files. These links are issued by an Azure Function, and the client then uses them to download the zone files. The idea is that we do not have to hand the keys used to sign the SAS to the client and can instead control access centrally. In the future, we could also use our existing identity provider to authenticate the client.